System Architecture

General

All data written to Granite is replicated across two LTO-9 tapes, so data can be recovered if a tape cartridge fails or is damaged. Note, however, that both copies currently reside in the same tape library in the NPCF facility.

The ScoutAM software can size tape media into sections (chunks) to mitigate the overhead of writing small files to tape. While this helps the library handle smaller files, large files are still strongly recommended. Quotas are set to enforce a large average file size of 100MB.
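One way to stay above the quota's average-file-size target is to group small files into larger bundles (for example, tar archives) before copying them to Granite. The Python below is a minimal sketch of that planning step. It reads "100MB" as decimal megabytes, and the greedy grouping strategy and the names `plan_bundles` and `average_file_size` are hypothetical illustrations, not ScoutAM features.

```python
from typing import List

# Assumption: "100MB" is read as 100 * 10**6 bytes (decimal megabytes).
TARGET_AVG_BYTES = 100 * 10**6


def average_file_size(sizes: List[int]) -> float:
    """Mean size in bytes of the given files (0.0 for an empty list)."""
    return sum(sizes) / len(sizes) if sizes else 0.0


def plan_bundles(sizes: List[int], target: int = TARGET_AVG_BYTES) -> List[List[int]]:
    """Greedily group file sizes into bundles of at least `target` bytes.

    Files that already meet the target become single-file bundles. Smaller
    files accumulate until the running total reaches the target. Any
    leftover small files form one final, possibly undersized, bundle.
    """
    bundles: List[List[int]] = []
    current: List[int] = []
    current_total = 0
    for size in sizes:
        if size >= target:
            bundles.append([size])
            continue
        current.append(size)
        current_total += size
        if current_total >= target:
            bundles.append(current)
            current, current_total = [], 0
    if current:
        bundles.append(current)
    return bundles


# 250 files of 1MB plus one 500MB file: the raw average is only ~3MB.
sizes = [10**6] * 250 + [500 * 10**6]
bundles = plan_bundles(sizes)
print(len(bundles), average_file_size([sum(b) for b in bundles]))  # prints 4 187500000.0
```

Greedy grouping keeps files in their original order, which tends to keep related files in the same bundle; a smarter packer could reduce the undersized final bundle but is not needed to clear the average.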

Tape Library

Granite consists of a single Spectra TFinity Plus library running Spectra's LumOS library software. This 19-frame library can hold over 300PB of replicated data, using 20 LTO-9 tape drives to transfer data to and from thousands of LTO-9 (18TB) tapes.
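Because every file is written to two LTO-9 cartridges, the tape footprint of a dataset is about twice its size divided by the 18TB native capacity of a cartridge. The Python sketch below makes that arithmetic explicit. It assumes decimal units, no hardware compression, and completely filled cartridges, so real cartridge counts will differ; `tapes_needed` is a hypothetical helper.

```python
# Assumptions: decimal units, LTO-9 native (uncompressed) capacity,
# and every cartridge filled completely.
LTO9_NATIVE_BYTES = 18 * 10**12  # 18TB per cartridge


def tapes_needed(user_bytes: int, copies: int = 2,
                 tape_bytes: int = LTO9_NATIVE_BYTES) -> int:
    """Cartridges needed to store `copies` full copies of `user_bytes`.

    Each copy is rounded up to whole cartridges on its own, because the
    copies are written to different tapes.
    """
    per_copy = -(-user_bytes // tape_bytes)  # integer ceiling division
    return copies * per_copy


print(tapes_needed(10**15))  # 1PB of user data; prints 112
```

For 1PB of user data this gives 56 cartridges per copy, or 112 in total. Integer ceiling division avoids floating-point rounding on very large byte counts.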

Granite Servers

Five Dell PowerEdge servers form Granite's server infrastructure.

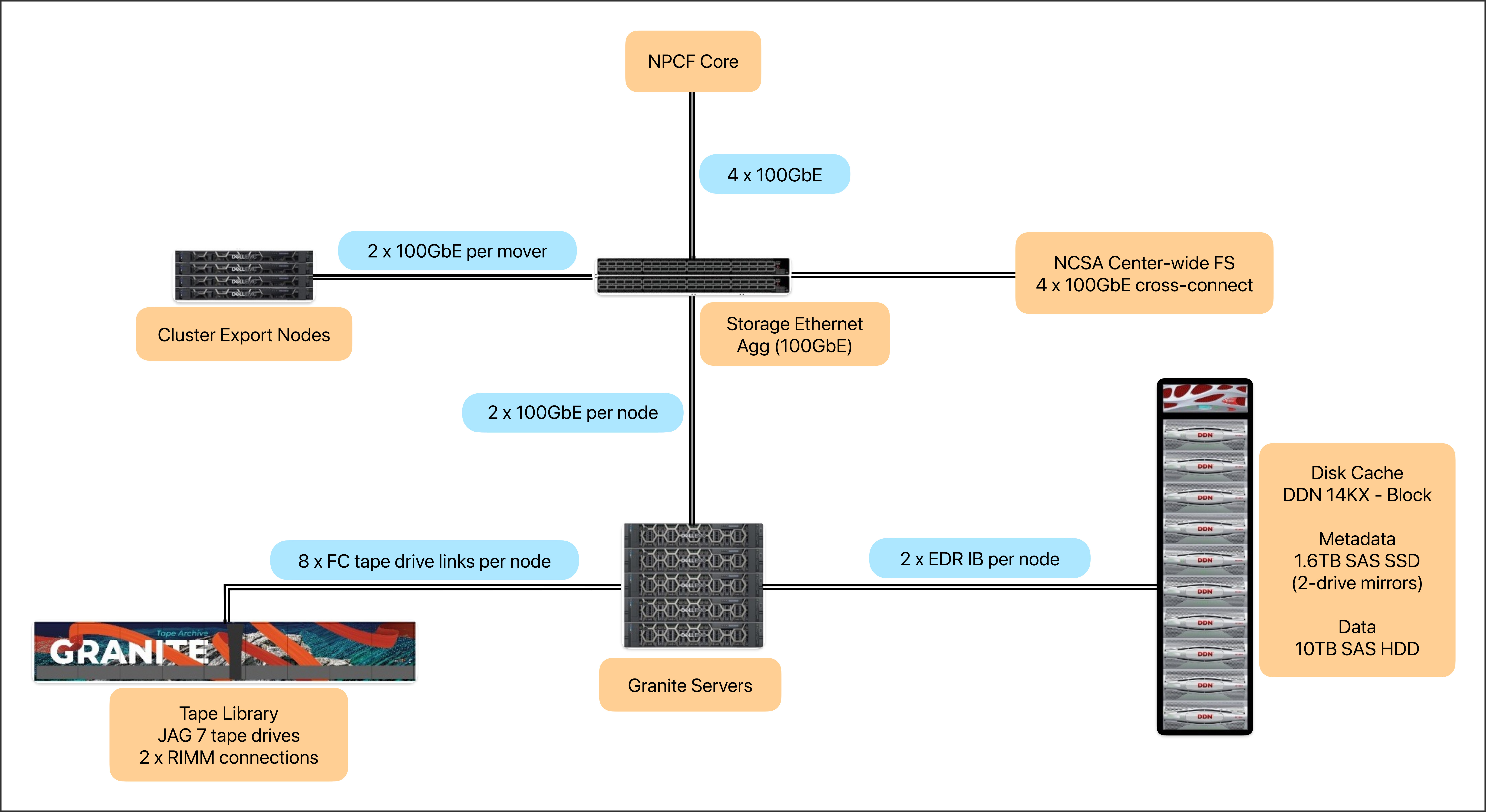

Each node connects directly over Fibre Channel (FC) to four tape interfaces on the tape library and connects to the disk cache over 100Gb InfiniBand. Each node also has 2 x 100GbE connections to the storage Ethernet aggregation, which in turn connects to the NPCF core network at 2 x 100GbE.
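With these link speeds, the tape drives rather than the network are likely to set the ceiling on migration throughput. The Python sketch below compares nominal stage bandwidths and picks the slowest. The figures are assumptions: links are taken at line rate with no protocol overhead, the 2 x 100GbE core connection is read as a total for the aggregation rather than per node, LTO-9 drives are taken at their 400MB/s native rate, and the disk cache and InfiniBand stages are omitted because their rates are not given here. The stage names and helpers are hypothetical.

```python
from typing import Dict, Tuple

GBIT_BYTES = 10**9 / 8  # bytes per second carried by 1 Gb/s of line rate

# Hypothetical per-stage bandwidths in bytes/s, using nominal rates and
# ignoring protocol overhead. Disk cache and InfiniBand rates are not
# stated in the architecture description, so they are left out.
STAGES: Dict[str, float] = {
    "node_ethernet": 5 * 2 * 100 * GBIT_BYTES,  # five nodes, 2 x 100GbE each
    "core_uplink": 2 * 100 * GBIT_BYTES,        # aggregation <-> NPCF core
    "tape_drives": 20 * 400 * 10**6,            # 20 LTO-9 drives, 400MB/s native
}


def bottleneck(stages: Dict[str, float]) -> Tuple[str, float]:
    """Return the name and rate of the slowest stage."""
    name = min(stages, key=lambda k: stages[k])
    return name, stages[name]


def user_ingest_ceiling(tape_rate: float, copies: int = 2) -> float:
    """User data rate the drives can absorb when each byte is written `copies` times."""
    return tape_rate / copies


name, rate = bottleneck(STAGES)
print(name, rate / 1e9, user_ingest_ceiling(rate) / 1e9)  # prints tape_drives 8.0 4.0
```

Under these assumptions the drives top out near 8GB/s of tape writes, or about 4GB/s of user data once both copies are counted, well below the 25GB/s core connection. The conclusion holds whether the core link is read as per node or as a total, since either reading exceeds the tape rate.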

Disk Cache

The archive disk cache is the staging area where all data lands when it is ingested into or extracted from the archive. It currently consists of a DDN SFA 14KX unit with a mix of SAS SSDs (metadata) and SAS HDDs (capacity), for an aggregate capacity of ~2PB.
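The cache size also bounds how large a backlog Granite can hold while data waits to be migrated to tape. The Python below is a rough, hypothetical estimate of how long the drives would take to drain a full cache. It treats the whole ~2PB as user data awaiting migration, assumes 20 drives at the LTO-9 native rate of 400MB/s, counts both tape copies, and ignores mounts, seeks, and recall traffic; `drain_hours` is an illustrative name.

```python
def drain_hours(cache_bytes: float, drive_count: int = 20,
                drive_rate: float = 400 * 10**6, copies: int = 2) -> float:
    """Hours for the tape drives to migrate `cache_bytes` of user data.

    Each byte is written `copies` times, so the drives must move
    copies * cache_bytes at their combined native rate. Tape mounts,
    seeks, and competing recall traffic are ignored.
    """
    total_tape_bytes = cache_bytes * copies
    aggregate_rate = drive_count * drive_rate  # bytes per second
    return total_tape_bytes / aggregate_rate / 3600


print(round(drain_hours(2 * 10**15), 1))  # a full ~2PB cache; prints 138.9
```

Under these assumptions a full cache takes roughly 139 hours (about 5.8 days) to migrate; mounts and seeks would push the real figure higher.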

System Architecture Diagram