Running Jobs

HAL uses Slurm for job management. For a complete guide to Slurm, see the Slurm documentation. The following are simple examples with system-specific instructions.

HAL Slurm Wrapper Suite (Recommended)

Note

The Slurm Wrapper Suite (SWSuite) was designed with new Slurm users in mind and simplifies many aspects of job submission in favor of automation. For advanced use cases, the original Slurm commands are still available for use.

The HAL Slurm Wrapper Suite was designed to help users use HAL easily and efficiently. SWSuite:

Minimizes the required input options.

Is consistent with the original Slurm run-script format.

Submits a job to a suitable partition based on the number of GPUs needed (number of nodes for CPU partition).

Commands

The current version is swsuite-v0.4, and includes the following commands:

swrun: request resources to run interactive jobs (Slurm command srun).

swrun -p <partition_name> -c <cpu_per_gpu> -t <walltime> -r <reservation_name>

<partition_name>: (required) cpun1, cpun2, cpun4, cpun8, gpux1, gpux2, gpux3, gpux4, gpux8, gpux12, gpux16.

<cpu_per_gpu>: (optional) 16 CPUs (default), range from 16 CPUs to 40 CPUs.

<walltime>: (optional) 4 hours (default), range from 1 hour to 24 hours in integer format.

<reservation_name>: (optional) reservation name granted to user.

Example

swrun -p gpux4 -c 40 -t 24 # requests a full node: 1x node, 4x gpus, 160x cpus, 24x hours
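The documented option ranges can be checked before a request is made. The following is a minimal sketch, not part of SWSuite: `build_swrun_cmd` is a hypothetical helper that applies the defaults and ranges listed above and prints the resulting command instead of running it.

```shell
#!/bin/bash
# Hypothetical helper (not part of SWSuite): validate options against the
# documented ranges, then print the swrun command instead of running it.
build_swrun_cmd() {
    local partition="$1"
    local cpus="${2:-16}"     # documented default: 16 CPUs per GPU
    local hours="${3:-4}"     # documented default: 4 hours

    if [ -z "$partition" ]; then
        echo "error: partition is required" >&2
        return 1
    fi
    case "$cpus" in
        ''|*[!0-9]*) echo "error: cpu_per_gpu must be an integer" >&2; return 1 ;;
    esac
    case "$hours" in
        ''|*[!0-9]*) echo "error: walltime must be an integer" >&2; return 1 ;;
    esac
    if [ "$cpus" -lt 16 ] || [ "$cpus" -gt 40 ]; then
        echo "error: cpu_per_gpu must be between 16 and 40" >&2
        return 1
    fi
    if [ "$hours" -lt 1 ] || [ "$hours" -gt 24 ]; then
        echo "error: walltime must be between 1 and 24 hours" >&2
        return 1
    fi
    echo "swrun -p $partition -c $cpus -t $hours"
}

build_swrun_cmd gpux2 20 8   # prints: swrun -p gpux2 -c 20 -t 8
```

The helper does not validate the partition name itself; it only guards the numeric options.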

Warning

Using interactive jobs to run long-running scripts is not recommended.

If you are going to walk away from your computer while your script is running, consider submitting a batch job. Unattended interactive sessions can remain idle until they run out of walltime and thus block out resources from other users.

We will issue warnings when we find resource-heavy idle interactive sessions and repeated offenses may result in revocation of access rights.

swbatch: request resources to submit a batch script to Slurm (Slurm command sbatch).

swbatch <run_script>

<run_script>: (required) same as an original Slurm batch script.

Within the <run_script>:

<job_name>: (optional) job name.

<output_file>: (optional) output file name.

<error_file>: (optional) error file name.

<partition_name>: (required) cpun1, cpun2, cpun4, cpun8, gpux1, gpux2, gpux3, gpux4, gpux8, gpux12, gpux16.

<cpu_per_gpu>: (optional) 16 CPUs (default), range from 16 CPUs to 40 CPUs.

<walltime>: (optional) 24 hours (default), range from 1 hour to 24 hours in integer format.

<reservation_name>: (optional) reservation name granted to user.

Example

swbatch demo.swb

demo.swb:

    #!/bin/bash
    #SBATCH --job-name="demo"
    #SBATCH --output="demo.%j.%N.out"
    #SBATCH --error="demo.%j.%N.err"
    #SBATCH --partition=gpux1
    #SBATCH --time=4

    srun hostname
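To produce run scripts like demo.swb for different partitions and walltimes, a small generator can help. This is a sketch under stated assumptions: `write_swb` is a hypothetical helper, it uses only the directives that appear in demo.swb, and `srun hostname` stands in for the real workload.

```shell
#!/bin/bash
# Hypothetical helper: write a minimal SWSuite run script using only the
# directives shown in demo.swb.
# Arguments: output path, job name, partition, walltime in whole hours.
write_swb() {
    local path="$1" name="$2" partition="$3" hours="$4"
    cat > "$path" <<EOF
#!/bin/bash
#SBATCH --job-name="$name"
#SBATCH --output="$name.%j.%N.out"
#SBATCH --error="$name.%j.%N.err"
#SBATCH --partition=$partition
#SBATCH --time=$hours

srun hostname
EOF
}

write_swb train.swb train gpux2 12
# Submit on HAL with: swbatch train.swb
```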

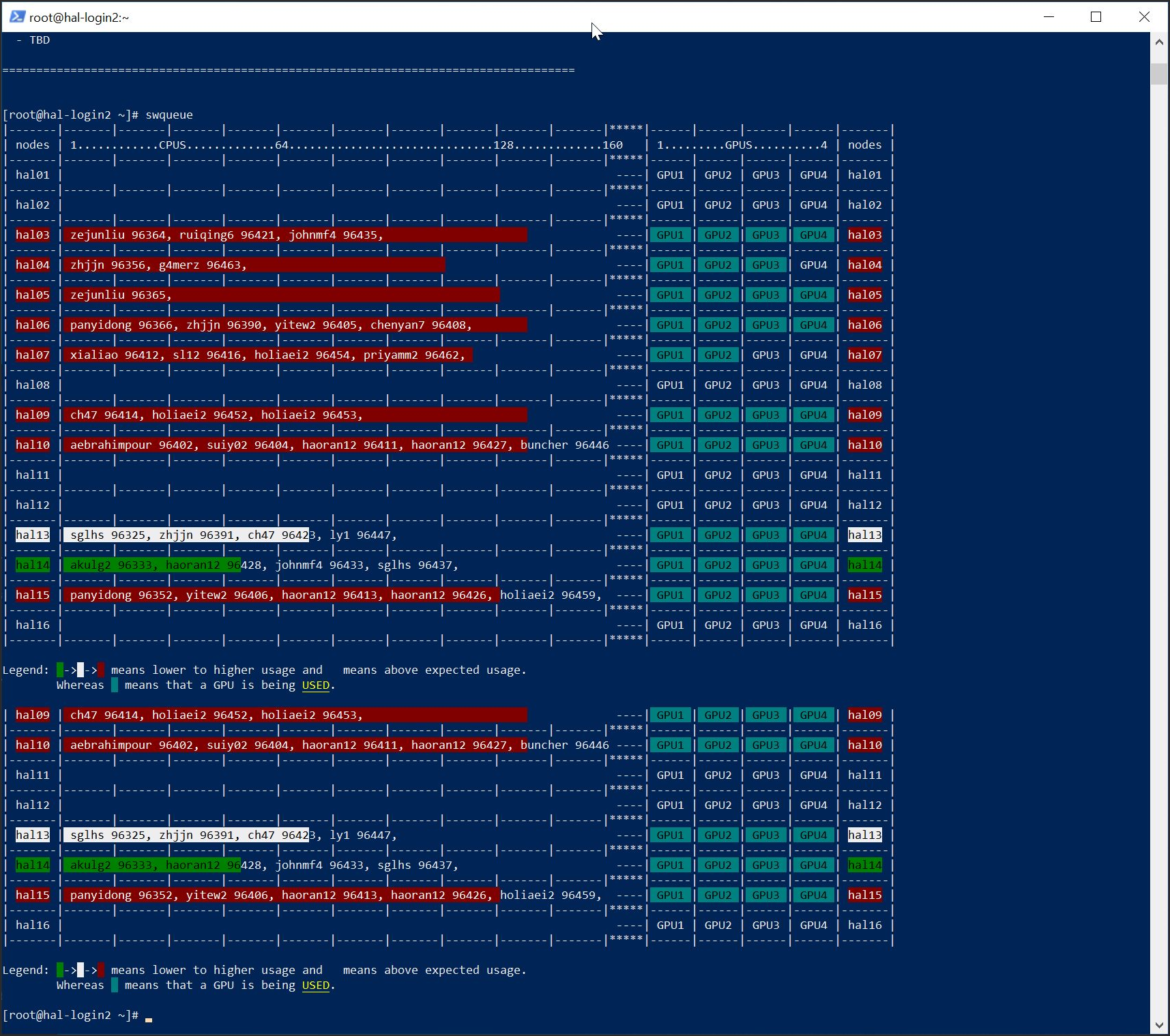

swqueue: check current running jobs and computational resource status (Slurm command squeue).

swqueue

Usage

Monitor your usage and avoid occupying resources that you cannot make use of.

Many applications require some amount of modification to make use of more than one GPU for computation.

Almost all programs require nontrivial optimizations to be able to run efficiently on more than one node (partitions gpux8 and larger).

New Job Queues (SWSuite only)

Under current policy, jobs requesting more than 5 nodes require a reservation. Otherwise, they will be held by the scheduler and will not execute.

The following partitions have:

normal priority

24 hour max walltime

no local scratch

| Partition Name | Nodes Allowed | Min-Max CPUs Per Node Allowed | Min-Max Mem Per Node Allowed | GPU Allowed |
|---|---|---|---|---|
| gpux1 | 1 | 16-40 | 19.2-48 GB | 1 |
| gpux2 | 1 | 32-80 | 38.4-96 GB | 2 |
| gpux3 | 1 | 48-120 | 57.6-144 GB | 3 |
| gpux4 | 1 | 64-160 | 76.8-192 GB | 4 |
| gpux8 | 2 | 64-160 | 76.8-192 GB | 8 |
| gpux12 | 3 | 64-160 | 76.8-192 GB | 12 |
| gpux16 | 4 | 64-160 | 76.8-192 GB | 16 |
| cpun1 | 1 | 96-96 | 115.2-115.2 GB | 0 |
| cpun2 | 2 | 96-96 | 115.2-115.2 GB | 0 |
| cpun4 | 4 | 96-96 | 115.2-115.2 GB | 0 |
| cpun8 | 8 | 96-96 | 115.2-115.2 GB | 0 |
| cpun16 | 16 | 96-96 | 115.2-115.2 GB | 0 |
| cpu_mini [1] | 1 | 8-8 | 9.6-9.6 GB | 0 |
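The table follows two simple patterns: each GPU partition is named after its GPU count, and the memory figures correspond to 1.2 GB per CPU. The sketch below encodes both; `gpu_partition` and `mem_gb_for_cpus` are hypothetical helpers for illustration and are not how SWSuite itself selects a partition.

```shell
#!/bin/bash
# Hypothetical helpers that mirror the partition table; not SWSuite code.

# Map a GPU count to the partition that provides exactly that many GPUs.
gpu_partition() {
    case "$1" in
        1|2|3|4|8|12|16) echo "gpux$1" ;;
        *) echo "error: no partition offers $1 GPUs" >&2; return 1 ;;
    esac
}

# Memory in GB for a CPU count, using the 1.2 GB per CPU ratio implied by
# the table (for example, 16 CPUs -> 19.2 GB).
mem_gb_for_cpus() {
    awk -v cpus="$1" 'BEGIN { printf "%.1f\n", cpus * 1.2 }'
}

gpu_partition 8        # prints: gpux8
mem_gb_for_cpus 16     # prints: 19.2
mem_gb_for_cpus 160    # prints: 192.0
```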

SWSuite Example Job Scripts

Find these example job scripts in /opt/samples/runscripts and request adequate resources to run them.

| Script Name | Job Type | Partition | Walltime | Nodes | CPU | GPU | Memory |
|---|---|---|---|---|---|---|---|
|  | interactive | gpux1 | 24 hrs | 1 | 16 | 1 | 19.2 GB |
|  | interactive | gpux2 | 24 hrs | 1 | 32 | 2 | 38.4 GB |
|  | batch | gpux1 | 24 hrs | 1 | 16 | 1 | 19.2 GB |
|  | batch | gpux2 | 24 hrs | 1 | 32 | 2 | 38.4 GB |
|  | batch | gpux4 | 24 hrs | 1 | 64 | 4 | 76.8 GB |
|  | batch | gpux8 | 24 hrs | 2 | 128 | 8 | 153.6 GB |
|  | batch | gpux16 | 24 hrs | 4 | 256 | 16 | 153.6 GB |

Original Slurm

Available Queues

| Name | Priority | Max Walltime | Max Nodes | Min/Max CPUs | Min/Max RAM | Min/Max GPUs | Description |
|---|---|---|---|---|---|---|---|
| cpu | normal | 24 hrs | 16 | 1-96 | 1.2 GB per CPU | 0 | CPU-only jobs |
| gpu | normal | 24 hrs | 16 | 1-160 | 1.2 GB per CPU | 0-64 | Jobs utilizing GPUs |
| debug | high | 4 hrs | 1 | 1-160 | 1.2 GB per CPU | 0-4 | For single-node short jobs. |

Commands

srun: submit an interactive job.

    srun --partition=debug --pty --nodes=1 \
         --ntasks-per-node=16 --cores-per-socket=4 \
         --threads-per-core=4 --sockets-per-node=1 \
         --mem-per-cpu=1200 --gres=gpu:v100:1 \
         --time 01:30:00 --wait=0 \
         --export=ALL /bin/bash

sbatch: submit a batch job.

sbatch [job_script]

squeue: check job status.

squeue # check all jobs from all users

squeue -u [username] # check all jobs belonging to username

scancel: cancel a running job.

scancel [job_id] # cancel job with [job_id]
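For reference, a batch script for `sbatch [job_script]` might look like the sketch below. It reuses the resource options from the srun example (16 tasks, 1200 MB per CPU, one V100 GPU) and the gpu partition from the queue table; the job name and the echo line are placeholders, and the fallback value `local` is an assumption that only lets the script be dry-run outside the scheduler.

```shell
#!/bin/bash
#SBATCH --job-name="demo"
#SBATCH --partition=gpu
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=16
#SBATCH --mem-per-cpu=1200
#SBATCH --gres=gpu:v100:1
#SBATCH --time=01:30:00

# Slurm sets SLURM_JOB_ID inside a job; fall back to "local" so the script
# can also be dry-run outside the scheduler.
job_id="${SLURM_JOB_ID:-local}"
echo "job $job_id running on $(hostname)"
```

Saved as, say, demo.sb, it would be submitted with `sbatch demo.sb`. The `#SBATCH` lines are ordinary comments to bash, so Slurm reads them while the shell ignores them.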

PBS Commands

Some PBS commands are supported by Slurm.

pbsnodes: check node status.

pbsnodes

qstat: check job or queue status.

qstat -f [job_number] # check job status

qstat # check queue status

qdel: delete a job.

qdel [job_number]

Submit a batch job:

    $ cat test.pbs
    #!/usr/bin/sh
    #PBS -N test
    #PBS -l nodes=1
    #PBS -l walltime=10:00
    hostname
    $ qsub test.pbs
    107
    $ cat test.pbs.o107
    hal01.hal.ncsa.illinois.edu

Reasons a Pending Job Isn’t Running

Use the following command to get a list of your jobs (replace <username> with your username):

squeue -u <username>

The rightmost column, NODELIST(REASON), shows the reason each pending job is waiting.
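When many jobs are pending, it can help to summarize the reasons instead of reading the full listing. The sketch below assumes standard squeue options (`-h` to drop the header, `-t PENDING` to filter by state, `-o "%r"` to print only the reason); `count_reasons` is a hypothetical helper that reads one reason per line, so it can be tried with sample input away from the cluster.

```shell
#!/bin/bash
# Count how many pending jobs share each reason. Reads one reason per line
# on stdin, so it can be tried without access to a Slurm cluster.
count_reasons() {
    awk 'NF { count[$0]++ } END { for (r in count) print r, count[r] }' | sort
}

# On the cluster (not run here):
#   squeue -u "$USER" -h -t PENDING -o "%r" | count_reasons

# Sample input standing in for squeue output:
printf 'Priority\nResources\nPriority\n' | count_reasons
```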

Priority

There is at least one pending job with a higher priority than this job. The priority of a job depends on a couple of factors, the biggest of which is recent usage. You are most likely seeing this reason after running some combination of a large number of jobs, jobs using a lot of resources, or jobs that run for a long time. The recent usage factor decays gradually over a two-week period, so any usage from more than two weeks before the job was submitted does not affect the priority of the job. You can check your recent HAL usage.

Jobs that are pending for this reason may remain pending for a long time if the recent usage factor has reduced your priority below most of the active users. If there is a sufficient difference between someone’s recent usage and yours, and the difference in the recent usage factor is large enough to exceed the waiting time factor, their job may receive a higher priority and run before your job, even if it is submitted after your job.

ReqNodeNotAvail

Some of the nodes specifically requested by the job aren’t available. The nodes could be unavailable for one of the following reasons:

Running jobs with a higher priority.

Reserved in a reservation.

Manually drained by an administrator for maintenance.

Unavailable due to some issues.

This job will run when all the requested nodes become available.

Resources

This job is at the front of the queue, but there are not enough resources for it to start running. This job will start running as soon as enough resources become available. The priority calculation favors large jobs, so when resources gradually become available, smaller jobs with a similar recent usage factor won’t run before this job and take away the available resource. Note that if someone has much lower recent usage than you do, their jobs can still run before your job, because the bonus from their recent usage factor can exceed the bonus from your job size factor.

AssocGrpGRES

This means you have reached the limit of resources that can be allocated to one user at any given time. There are three limits in place:

Maximum of 5 running jobs.

Maximum of 5 nodes in use by running jobs.

Maximum of 16 GPUs in use by running jobs.

This job will run as soon as some of your running jobs finish and free up the resources.
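The three limits can be checked by hand before submitting more work. A minimal sketch, assuming you already know your running totals: `within_user_limits` is a hypothetical helper, and its arguments are the number of jobs, nodes, and GPUs you would have in use once the new job starts.

```shell
#!/bin/bash
# Hypothetical pre-submission check against the three per-user limits:
# at most 5 running jobs, 5 nodes, and 16 GPUs.
# Arguments: total jobs, total nodes, total GPUs after the new job starts.
within_user_limits() {
    local jobs="$1" nodes="$2" gpus="$3"
    [ "$jobs" -le 5 ] && [ "$nodes" -le 5 ] && [ "$gpus" -le 16 ]
}

if within_user_limits 3 4 16; then
    echo "within limits"
else
    echo "would exceed limits; the job is likely to pend"
fi
```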

Reservation

This job was submitted to an inactive reservation. If the reservation starts in the future, the job will run when the reservation starts. If the reservation has ended, the job will stay in the queue until it is deleted.

Error: “sbatch: error: Batch job submission failed: Invalid account or account/partition combination specified”.

Your account has not been properly initialized. Try logging in to and out of hal-login2.ncsa.illinois.edu via SSH a few times.

ssh <username>@hal-login2.ncsa.illinois.edu

If it still isn’t working, contact an admin on Slack or submit a support request.