System Architecture

Backend

Harbor’s capacity comes from two Ceres DBox enclosures. Each enclosure contains 22 x 15.36TB QLC NVMe drives and 8 x 800GB persistent memory devices. The enclosures present each drive as an NVMe-oF target to every CNode in the Harbor system. Writes to Harbor land on the persistent memory devices first, where they are aggregated before being flushed to the much larger QLC NVMe tier.
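
The drive counts above imply a raw capacity for each tier. The following is a minimal sketch of that arithmetic, using only the figures stated in this section. The constant and function names are illustrative, and the results are raw (pre-data-protection, pre-data-reduction) decimal terabytes, not usable file system capacity.

```python
# Raw-capacity arithmetic for Harbor's backend, from the figures in this section.
# All names here are illustrative; values are decimal units (1 TB = 1000 GB).

ENCLOSURES = 2                    # Ceres DBox enclosures
QLC_DRIVES_PER_ENCLOSURE = 22     # QLC NVMe drives per enclosure
QLC_DRIVE_TB = 15.36              # capacity of one QLC drive, in TB
PMEM_PER_ENCLOSURE = 8            # persistent memory devices per enclosure
PMEM_DEVICE_GB = 800              # capacity of one persistent memory device, in GB


def raw_qlc_tb() -> float:
    """Total raw QLC capacity across all enclosures, in TB."""
    return ENCLOSURES * QLC_DRIVES_PER_ENCLOSURE * QLC_DRIVE_TB


def raw_pmem_tb() -> float:
    """Total raw persistent memory (write buffer) capacity, in TB."""
    return ENCLOSURES * PMEM_PER_ENCLOSURE * PMEM_DEVICE_GB / 1000


if __name__ == "__main__":
    print(f"Raw QLC tier:               {raw_qlc_tb():.2f} TB")
    print(f"Raw persistent memory tier: {raw_pmem_tb():.2f} TB")
```

Under these figures the QLC tier is roughly fifty times larger than the persistent memory tier, which is consistent with the persistent memory acting as a write-aggregation buffer rather than as primary capacity.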

Each DBox contains two dual-node DTrays, each of which consists of two Mellanox ConnectX-6 Data Processing Units (DPUs) where the DBox containers run. The enclosures have no other Intel, AMD, or ARM processors. I/O is driven by the DPUs on the Network Interface Cards (NICs), which are connected to Harbor’s backend 100GbE infrastructure.

Frontend

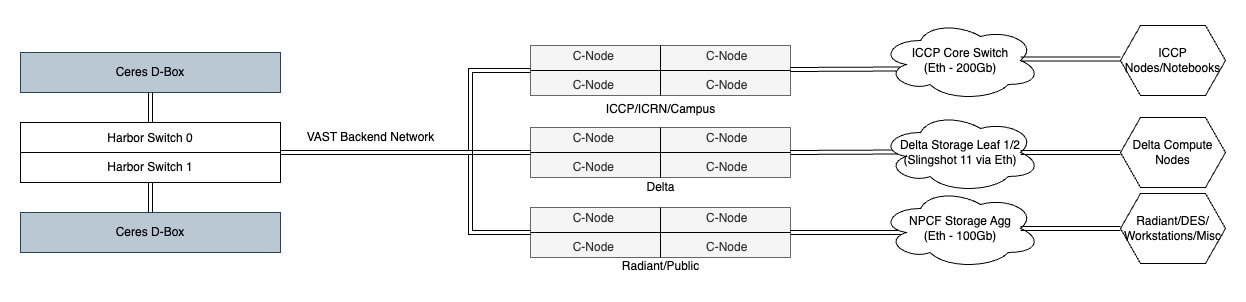

CBoxes bridge Harbor’s backend 100GbE fabric to a cluster’s high-speed network (HSN), enabling Harbor to connect to multiple cluster environments and form the file system. Major environments (such as the Delta Complex and Illinois Computes) have dedicated CBoxes, while smaller environments share a common “core” CBox.

These CBoxes are standard x86 servers packaged in a 4-nodes-in-2U configuration. Currently, a total of 12 CNodes (3 x CBoxes) drive the file system. These machines serve Harbor’s I/O and host the Virtual IP addresses (VIPs) that clients use to mount the Harbor file system.
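
The CNode count follows from the packaging: three CBoxes of four nodes each. Because every backend device is presented to every CNode, the number of device-to-CNode paths grows as the product of the two. The sketch below works through that arithmetic. It assumes the persistent memory devices are exported in the same way as the QLC drives, which this section does not state explicitly, and all names are illustrative.

```python
# Frontend/backend fan-out arithmetic, from the figures in this section.
# Assumption: persistent memory devices are exported to CNodes the same way
# as QLC drives. All names are illustrative.

CBOXES = 3
CNODES_PER_CBOX = 4               # 4-nodes-in-2U packaging
ENCLOSURES = 2
QLC_DRIVES_PER_ENCLOSURE = 22
PMEM_PER_ENCLOSURE = 8


def cnode_count() -> int:
    """Number of CNodes serving the file system."""
    return CBOXES * CNODES_PER_CBOX


def backend_device_count(include_pmem: bool = True) -> int:
    """Number of backend devices across all enclosures."""
    per_enclosure = QLC_DRIVES_PER_ENCLOSURE
    if include_pmem:
        per_enclosure += PMEM_PER_ENCLOSURE
    return ENCLOSURES * per_enclosure


def device_paths(include_pmem: bool = True) -> int:
    """Device-to-CNode paths when every device is visible to every CNode."""
    return backend_device_count(include_pmem) * cnode_count()


if __name__ == "__main__":
    print("CNodes:", cnode_count())
    print("Backend devices:", backend_device_count())
    print("Device-to-CNode paths:", device_paths())
```

Since every CNode can reach every device, any CNode can in principle serve any client request, which is what allows the VIPs to be spread across the CNodes.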

System Architecture Diagram